Generalization

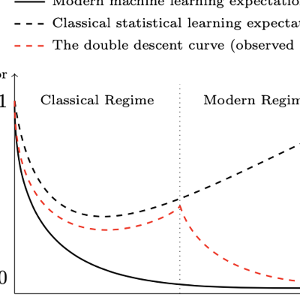

Generalization in deep learning refers to a model's ability to perform well on unseen data by learning underlying patterns rather than memorizing its training examples. The current view of generalization in deep learning is shaped by the double descent phenomenon. As model complexity grows, test error first follows the classical U-shaped curve. It then rises to a peak near the interpolation threshold, the point at which the model can just barely fit the training data exactly, and falls again as models become highly overparameterized. Generalization is also closely tied to implicit bias. Among the many solutions that fit the training data, the optimization procedure and the model architecture favor particular ones, typically simpler ones. This preference helps explain why deep networks generalize well despite having enough capacity to overfit.
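The following is a minimal sketch of implicit bias under a deliberately simplified assumption. A linear least-squares model stands in for a deep network, and it has fewer training examples than parameters, so infinitely many weight vectors fit the data exactly. Plain gradient descent started from zero only ever moves within the row space of `X`. It therefore converges to the minimum-norm interpolating solution, which NumPy's `np.linalg.pinv` computes directly. The helper `gd_least_squares`, the problem sizes, the step size, and the random seed are illustrative choices and are not taken from the text above.

```python
import numpy as np


def gd_least_squares(X, y, steps=10_000):
    """Hypothetical helper: gradient descent on 0.5 * ||Xw - y||^2, starting from w = 0."""
    # Step size 1/L, where L = ||X||_2^2 is the largest eigenvalue of X^T X,
    # keeps the iteration stable.
    lr = 1.0 / np.linalg.norm(X, 2) ** 2
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = X.T @ (X @ w - y)  # every update lies in the row space of X
        w -= lr * grad
    return w


rng = np.random.default_rng(0)
n, d = 5, 20  # fewer examples than parameters: infinitely many exact fits
X = rng.standard_normal((n, d))
y = rng.standard_normal(n)

w_gd = gd_least_squares(X, y)
w_min_norm = np.linalg.pinv(X) @ y  # minimum-norm interpolating solution

print(np.allclose(X @ w_gd, y, atol=1e-6))       # True: GD fits the training data exactly
print(np.allclose(w_gd, w_min_norm, atol=1e-6))  # True: and it selects the minimum-norm fit
```

Adding any vector from the null space of `X` to `w_gd` would still fit the training data exactly, but the result would have a larger norm. Gradient descent never selects those solutions, and in this toy setting that is the sense in which the optimizer itself encodes a preference among equally good fits.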