Expressivity

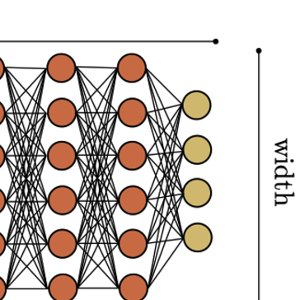

Deep learning models have transformed numerous application domains, yet their remarkable adaptability and success raise fundamental theoretical questions. A key problem is understanding the expressivity of neural networks, that is, which functions a given architecture can represent or approximate. To that end, we study the expressive power of various architectures and neuronal models, including feedforward, recurrent, self-attention, and spiking neural networks. We analyze their approximation properties and investigate how depth, width, and the choice of activation function influence their representational power, with the goal of identifying principles for designing efficient and versatile models.