Explainability

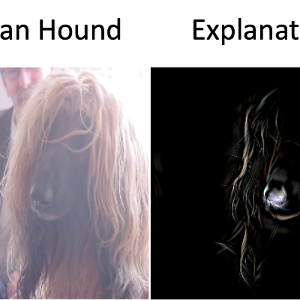

As artificial intelligence increasingly shapes high-stakes decisions in areas such as healthcare, finance, and the judicial system, explainable AI (XAI) has emerged as the field dedicated to making algorithmic reasoning transparent, interpretable, and accountable. XAI researchers and practitioners develop a range of methods. At one end are model-agnostic techniques such as LIME and SHAP, which explain a trained model's predictions without relying on its internal structure. At the other end are intrinsically interpretable models, such as shallow decision trees or sparse linear models, whose reasoning can be read directly. Both approaches aim to turn opaque, black-box computations into explanations a person can follow, for example by showing which input features most influenced a particular prediction. The core mission of XAI is to bridge the gap between complex computational systems and human understanding, so that AI decision-making can be scrutinized, validated, and trusted across diverse professional and ethical contexts.
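To make model-agnostic attribution more concrete, the sketch below computes exact Shapley values, the game-theoretic quantity that SHAP is built on, for a single prediction. It is a minimal illustration under stated assumptions and does not use the SHAP library. Features "absent" from a coalition are replaced by a baseline value, which is one of several possible conventions. The names `shapley_values` and `toy_model` are hypothetical. The exhaustive enumeration means the number of model calls grows exponentially with the number of features, so this only suits a handful of inputs. Practical tools approximate the same quantity more cheaply.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley attributions of predict(x) relative to predict(baseline).

    Assumption: a feature "absent" from a coalition takes its baseline value.
    The number of model calls grows exponentially with len(x).
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Hybrid input: coalition members come from x, all others from the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when it joins coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z: Sequence[float]) -> float:
    # Hypothetical model: an additive term plus a two-feature interaction.
    return 2.0 * z[0] + 3.0 * z[1] * z[2]


if __name__ == "__main__":
    print(shapley_values(toy_model, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]))
```

Consider the toy model f(z) = 2·z0 + 3·z1·z2 at x = (1, 1, 1) with an all-zero baseline. The attributions come out to 2, 1.5, and 1.5, up to floating-point rounding. Feature 0 contributes purely additively, while the interaction term is split evenly between features 1 and 2. The attributions also sum to f(x) - f(baseline) = 5. This "efficiency" property is what lets Shapley-based explanations decompose a prediction additively across features.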